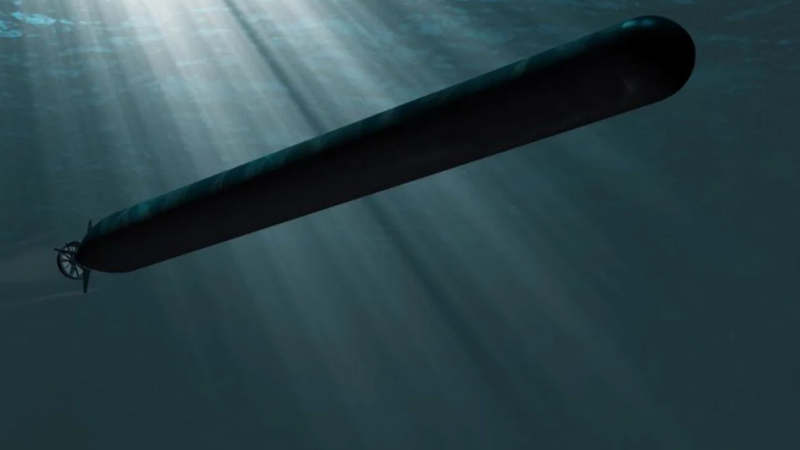

Image Courtesy: Lockheed Martin

Artificial intelligence and autonomy promise to be the future of civilian transport, but they also appear to be making their way into military technology.

We have previously written about the US Army’s interest in eVTOL vehicles, but now there seems to be a push closer to home: the Royal Navy has announced its interest in unmanned submersibles. What does this mean for the future of underwater warfare?

The Royal Navy recently awarded a £2.5 million contract to Plymouth-based MSubs, a leader in manned and unmanned submersibles. While the idea of an unmanned submersible is not exactly new – we have had remote-control submarines for many years – the big difference is the reliance on AI.

The Navy plans to develop an extra-large unmanned underwater vehicle (XLUUV) with an operational range of 3,000 miles and an endurance of three months. The plan is for the boat to run itself, handling navigation, investigation, and threat detection, although it will do so within the bounds of a given mission.

This idea is revolutionary and will require significant investment in machine learning technology. After all, submarine commanders go through years of training and rely on their instincts and experience to make important decisions. Teaching a machine to do this will take time and effort.

MarineAI plans to develop the submarine’s brain using a relatively simple Nvidia chip. The chip can provide the necessary computing power but, more importantly, is highly energy-efficient. This combination is vital for maximising the battery life of the unmanned boat.

Currently, the boat design relies on standard lithium-ion battery technology, but this will change as technology advances. Once we reach suitable energy density, there will be few reasons why an XLUUV would ever need to resurface.

While the idea is still in its early stages, we can already anticipate the types of missions an XLUUV would carry out. Primarily, it would handle intelligence gathering and reconnaissance, as well as dangerous operations such as mine detection and removal.

Across the Atlantic, the US Navy is working on a similar solution: the Orca. Developed alongside Boeing, it focuses on a modular design, meaning it can be reconfigured depending on the mission. The Orca is larger than its British counterpart: it is 16 metres in length and will have a range of 10,500 km (roughly 6,500 miles).

These early designs will be far from perfect. The biggest issue they face is artificial decision-making, particularly where high-stakes military decisions are concerned. However, within the next 30 years, we will likely see a much larger rollout of unmanned submersible technology.

That said, unmanned submersibles will never fully replace manned boats. For example, it would be unsafe and unethical to put AI in charge of the UK’s Trident programme. There will always be some missions that require human involvement, but unmanned submarines will be suitable for the more mundane and dangerous jobs.

AI in any context raises ethical questions; this is nothing new. What is new, though, is the thought of putting it in charge of an underwater vehicle with military capabilities. While there might be advantages to this, it certainly does not come without risk.

For example, submarine commanders have to make decisions that carry significant weight. Launching a torpedo could trigger an international conflict and is not a decision to be taken lightly. Putting such actions in the hands of AI removes the human judgement and emotional awareness that are vital in these situations.

Based on machine learning and scenario algorithms, a computer might think launching a torpedo is the right thing to do, but this is very rarely the case.

One solution is to develop an AI version of the Navy’s Perisher course, which is its submarine commander training programme. Feeding in vast amounts of training data, including successes and mistakes, is an effective way of training a computer in intricate matters.

Of course, another ethical consideration is the potential reduction in submariner roles. This is a common problem with AI systems, as more autonomy means fewer manned roles. However, if XLUUVs primarily undertake dangerous missions, it might not be as much of an issue.

These jobs could also be offered elsewhere, in roles that require human intervention. Such roles will always exist in military forces, and developing a fleet of unmanned submarines will mean more engineering roles.

The benefits of XLUUVs outweigh the drawbacks. Provided we can effectively teach the AI how to behave in sensitive situations, a fleet of unmanned submarines would be invaluable for intelligence gathering and dangerous missions. Teaching that behaviour, however, is one of the biggest challenges. Until we are able to teach computers to think with human emotions, it is unlikely they could be trusted with weapons.